Sometimes we need to extract information from websites. We can often do this through a website's API, but many websites do not provide one.

In this web scraping tutorial, you will:

- grasp the fundamentals of web scraping with Python;
- use BeautifulSoup and Requests to scrape and crawl Craigslist with Python;
- learn how to save your scraped output to a CSV or Excel file;
- understand the difference between Python web scraping libraries and frameworks.

So let's start a real-life web scraping project. Beautiful Soup is a pure Python library for extracting structured data from a website. It parses HTML and XML files and acts as a helper module, letting you work with HTML much as you would inspect a web page with your browser's developer tools.

This is where web scraping comes into play!

Python is widely used for web scraping because it makes the core logic easy to write. Whether you are a data scientist, developer, engineer, or anyone else who works with large amounts of data, web scraping with Python can be a great help.

When there is no direct way to download the data, web scraping in Python lets you extract large quantities of it with little hassle and in a short time.

In this tutorial, we will look at scraping with two powerful Python libraries: BeautifulSoup and Selenium.

BeautifulSoup and urllib

BeautifulSoup is a Python library for pulling data out of HTML and XML files. It does not download web pages itself, so we will use the urllib library to fetch the page.

First, install the BeautifulSoup 4 package and the lxml parser with pip:

```shell
$ pip install beautifulsoup4
$ pip install lxml
```

OR, on Debian or Ubuntu, with apt-get:

```shell
$ sudo apt-get install python3-bs4
$ sudo apt-get install python3-lxml
```

Here I am going to extract a page from the website https://www.botreetechnologies.com.

```python
from urllib.request import urlopen
from bs4 import BeautifulSoup
```

These lines import the packages our program uses. Next, we fetch the web page:

```python
response = urlopen('https://www.botreetechnologies.com/case-studies')
```

Beautiful Soup cannot search the raw response directly, so we first parse it into a tree:

```python
data = BeautifulSoup(response.read(), 'lxml')
```

Here we parsed the page's HTML content with the lxml parser.

As you can see, this page lists many case studies, and I want to read all of them.

Each case study has a title at the top followed by some details about the case. I want to extract all of that information.

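We can locate an element by its tag, class, id, and so on. BeautifulSoup also supports CSS selectors through select(), but it does not support XPath. For XPath, use lxml directly or Selenium.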

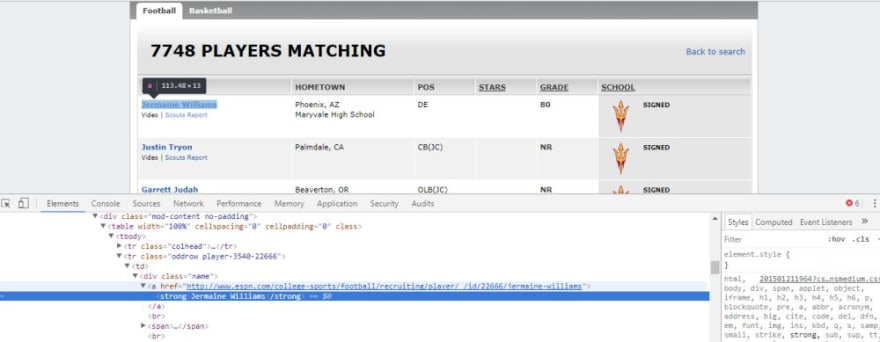

To find an element's class, right-click the element in your browser and choose Inspect Element.
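Here is a small sketch of the lookup options above (tag, class, id and a CSS selector). It runs against an inline HTML snippet rather than the live site, and the markup, id and class names are invented for illustration. It uses Python's built-in html.parser, so lxml is not required:

```python
from bs4 import BeautifulSoup

# Hypothetical markup, used only to demonstrate the lookup styles
html = """
<div id="main">
  <div class="content-section"><h2><a href="/cs/1">Case study one</a></h2></div>
  <p class="note">Not a case study</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

by_tag = soup.find("h2")                               # first <h2> tag
by_class = soup.find("div", class_="content-section")  # class_ avoids the Python keyword
by_id = soup.find(id="main")                           # any tag whose id is "main"
by_css = soup.select_one("div.content-section h2 a")   # CSS selector (XPath is not supported)

print(by_tag.get_text(strip=True))  # Case study one
print(by_class.h2.a["href"])        # /cs/1
print(by_id.name)                   # div
print(by_css.text)                  # Case study one
```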

```python
case_studies = data.find('div', {'class': 'content-section'})
```

If the page contains several elements with this class, find() returns only the first one. To get every element with this class, use the findAll() method (also available as find_all()):

```python
case_studies = data.findAll('div', {'class': 'content-section'})
```
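To see the difference on a tiny, made-up document with two hypothetical content-section divs, compare find() with findAll(). The sketch also shows find_all(), the newer spelling that recent Beautiful Soup releases prefer:

```python
from bs4 import BeautifulSoup

# Hypothetical page with two case-study sections
html = """
<div class="content-section"><h2><a href="/cs/1">First</a></h2></div>
<div class="content-section"><h2><a href="/cs/2">Second</a></h2></div>
"""
data = BeautifulSoup(html, "html.parser")

first = data.find("div", {"class": "content-section"})     # a single Tag (None if no match)
every = data.findAll("div", {"class": "content-section"})  # list-like ResultSet of all matches
same = data.find_all("div", {"class": "content-section"})  # newer name, same result

print(first.h2.a.text)               # First
print([d.h2.a.text for d in every])  # ['First', 'Second']
print(len(same))                     # 2
```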

Now we have the div elements with class 'content-section' and their child elements. For each one, we read the <h2> tag to get the TITLE and the <ul> tag to get all of its children, the <li> elements:

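```python
for case_stud in data.findAll('div', {'class': 'content-section'}):
    title = case_stud.find('h2').find('a').text  # the case-study TITLE
    case_stud_details = case_stud.find('ul').findAll('li')  # all <li> children
```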

case_stud_details now holds the list of <li> children of the <ul> element.

To get the first element of that list, write:

```python
case_stud_details[0]
```

We can also read an element's attributes and text. For example, to get the text of the third element:

```python
case_stud_details[2].text
```
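Putting these BeautifulSoup steps together, here is a sketch that turns every content-section into a record. The markup is a hypothetical stand-in for the case-studies page, and extract_case_studies() is an illustrative helper, not part of the original script:

```python
from bs4 import BeautifulSoup

# Hypothetical markup shaped like the structure described above:
# each section has an <h2><a> title and a <ul> of <li> details.
html = """
<div class="content-section">
  <h2><a href="/case-study/alpha">Alpha</a></h2>
  <ul><li>Industry: Retail</li><li>Tech: Python</li><li>Duration: 3 months</li></ul>
</div>
<div class="content-section">
  <h2><a href="/case-study/beta">Beta</a></h2>
  <ul><li>Industry: Health</li></ul>
</div>
"""


def extract_case_studies(soup):
    """Return one dict per content-section: title, link and detail texts."""
    records = []
    for case_stud in soup.find_all("div", {"class": "content-section"}):
        link = case_stud.find("h2").find("a")
        details_list = case_stud.find("ul")
        details = []
        if details_list is not None:  # tolerate a section without a <ul>
            details = [li.get_text(strip=True) for li in details_list.find_all("li")]
        records.append({"title": link.text, "href": link.get("href"), "details": details})
    return records


studies = extract_case_studies(BeautifulSoup(html, "html.parser"))
for study in studies:
    print(study["title"], study["details"])
```

Checking for a missing <ul> instead of letting an AttributeError escape means one oddly formatted case study does not stop the whole run.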

But I also want to click the TITLE of each case study and open its details page to get all of its information.

Since we need to interact with the website to get this dynamic content, we have to imitate normal user interaction. BeautifulSoup and urllib cannot do that, so we need a WebDriver.

A WebDriver opens a new browser window that we can control programmatically. It also lets us perform user actions such as clicking and scrolling.

Selenium is one such webdriver.

Selenium Webdriver

Selenium WebDriver accepts commands, sends them to a browser, and retrieves the results.

You can install Selenium on your system with the following simple command:


```shell
$ pip install selenium
```

To use it, import Selenium in your Python script:

```python
from selenium import webdriver
```

I am using the Firefox WebDriver in this tutorial. The Selenium snippets come from methods of a scraper class, hence self. Now we are ready to open our web page:

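```python
self.url = 'https://www.botreetechnologies.com/'
self.browser = webdriver.Firefox()  # opens a new, script-controlled Firefox window
self.browser.get(self.url)          # load the home page
```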

Now we need to click on ‘CASE-STUDIES’ to open that page.

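We can click an element with the following code. Selenium 4 removed the old find_element_by_xpath() helper, so we use find_element(By.XPATH, ...). The XPath goes in double quotes because it contains single quotes:

```python
from selenium.webdriver.common.by import By
self.browser.find_element(By.XPATH, "//div[contains(@id,'navbar')]/ul[2]/li[1]").click()
```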

We are now on the case-studies page, which lists every case study with some information.

Here, I want to click each case study and open its details page to extract all available information.

So I created a list of links for all case studies and loaded them one after the other.

To go back to the previous page, you can use the following code:

```python
# Equivalent to self.browser.back()
self.browser.execute_script('window.history.go(-1)')
```

The final Selenium script combines these steps: open the home page, click CASE-STUDIES, collect the case-study links, and load each one in turn.
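Here is a minimal sketch of how these steps might fit together. It is not the original script. CaseStudyScraper, its link selector and the extracted fields are hypothetical. The driver is passed in rather than created inside the class:

```python
import time

# Selenium's By.XPATH and By.CSS_SELECTOR are the plain strings below; they are
# written out so the class can also be exercised with a stand-in driver.
XPATH = "xpath"
CSS_SELECTOR = "css selector"


class CaseStudyScraper:
    """Sketch: open the site, click CASE-STUDIES, then visit every case study."""

    # Hypothetical selector for the case-study title links; confirm it with
    # Inspect Element before relying on it.
    LINK_SELECTOR = "div.content-section h2 a"

    def __init__(self, browser, url="https://www.botreetechnologies.com/", delay=1.0):
        self.browser = browser  # e.g. webdriver.Firefox()
        self.url = url
        self.delay = delay      # seconds to pause after each page load

    def open_case_studies(self):
        self.browser.get(self.url)
        # Same navbar XPath as above; a real run may need an explicit wait
        # (WebDriverWait) before the next step if the page loads slowly.
        self.browser.find_element(XPATH, "//div[contains(@id,'navbar')]/ul[2]/li[1]").click()

    def collect_links(self):
        # Copy the href strings now: element references go stale once we navigate away
        elements = self.browser.find_elements(CSS_SELECTOR, self.LINK_SELECTOR)
        return [el.get_attribute("href") for el in elements]

    def run(self):
        self.open_case_studies()
        results = []
        for link in self.collect_links():
            self.browser.get(link)  # open the details page directly by URL
            time.sleep(self.delay)
            # Hypothetical extraction: keep only the URL and the page title
            results.append({"url": link, "title": self.browser.title})
        return results
```

With Selenium installed, you would run it as `scraper = CaseStudyScraper(webdriver.Firefox())` and `results = scraper.run()`, then call `scraper.browser.quit()` to close the window. Each details page is opened by URL, so this version does not need `window.history.go(-1)`. Passing the driver in also keeps the class easy to test with a stand-in object.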

And we are done. You can now extract data from static web pages, or interact with dynamic ones, using the techniques above.

Conclusion: Web Scraping with Python Is an Essential Skill

Today, more than ever, companies work with huge amounts of data, and learning to scrape data with Python will take you a long way. In this tutorial, you learned web scraping in Python with Beautiful Soup.

Web scraping in Python with Selenium is also a useful skill. Companies need data engineers who can extract data and deliver it so that useful insights can be drawn from it, and hands-on Python web scraping projects are a good way to build that ability.

If you want to hire Python developers for web scraping, then contact BoTree Technologies. We have a team of engineers who are experts in web scraping. Give us a call today.


Web scraping means navigating the structured elements of a website and going deeper, layer by layer. The retrieved data is then formatted in the style you need. Here we apply Python's BeautifulSoup to a simple scraping example, step by step.

None of the code here is complicated, so it is easy to follow even if you are still a student. To help your learning, we provide a download link to a zip file containing all the source code for future use.


Source Code Download

We have released it under the MIT license, so feel free to use it in your own project or your school homework.

Download Guideline

- Set up a Python environment: on Windows, download an installer from the Python Downloads page; on Linux, use your distribution's Python package.

- The Windows installer also includes pip, which you can use to install more Python libraries later.

What Is Web Scraping

THE BASICS

Python Web Scraping

Web pages are written in HTML, which gives their text a structure, so scraping can pull organized information out of them. Python is good at this, so this article introduces its BeautifulSoup library.

What is BeautifulSoup?

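BeautifulSoup version 4 is a well-known Python library for web scraping. There was also BeautifulSoup version 3, but support for it was to be dropped on or after December 31, 2020, so you should learn version 4. The Beautiful Soup documentation defines it as "a Python library for pulling data out of HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree."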

BeautifulSoup Installation

If you have already downloaded and set up Python and pip on Windows or Linux, install the Beautiful Soup 4 package (imported as bs4) with pip install beautifulsoup4, and then check the result with pip show beautifulsoup4.

Scraping An Example Website

First, we need an example website. We focus only on laptop computers, so the laptop section of Snapdeal, an Indian shopping website, suits our purpose.

The site has two layers: the top layer is a product list, and the bottom layer holds each product's detailed specifications. To be safe, we suggest saving the pages first and then retrieving data from the saved copies, so the page contents and images are downloaded.

Next, you will learn how to scrape these web pages with BeautifulSoup. The result will be saved in JSON format in a file named result.json.

STEP 1

Finding How Web Pages Link

The previous section showed what kind of data we will deal with. Now we need to inspect the HTML to find entry points where BeautifulSoup scraping can start.

Finding The Relationship

The website you want to crawl probably has more layers than this example. Since no general rule fits every web page, it is best to find an entry point for BeautifulSoup by working out how the pages link to each other.

Each laptop is described by a JSON-style record with the fields pogid, href, img_url, title, price, price_discount, discount, and highlight. The fields under 'highlight' come from Layer 2; all other fields come from Layer 1. For example, 'title' holds the product name with a brief specification, and 'img_url' can be used to download product pictures.

What to Inspect in Layer 1

Looking at the HTML, let us continue inspecting Layer 1 in the file laptop.html. BeautifulSoup can locate elements with class='product-tuple-image' and scrape <a pogId=, <a href=, <source srcset=, and <img title= into pogid, href, img_url, and title, respectively. Here, href points to the product's page in Layer 2.

Similarly, from elements with class='product-tuple-description', BeautifulSoup can scrape the product-desc-price and product-price <span> elements and the product-discount <div> to fill the JSON fields price, price_discount, and discount. That covers all the items in Layer 1.

What to Inspect in Layer 2

Next, BeautifulSoup traverses the Layer 2 HTML files, such as 638317853217.html, which hold the detailed laptop specifications mentioned earlier. In Layer 2, the data comes from the items inside the element with class='highlightsTileContent '.

STEP 2

Scraping By BeautifulSoup

Before scraping, we need to introduce requests, a popular Python library on PyPI for getting content from websites. BeautifulSoup then works through the layers one by one to convert all the information into JSON-style data.

PyPI requests

Requests is an elegant and simple HTTP library for Python. Here we use it to read the text of web pages and save it as HTML files. You can install it by running pip install requests in a terminal or command prompt.

Besides fetching web pages, requests.get() can also download binary data such as pictures, as download_image() does.
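The article does not show download_image() itself. Below is a hedged sketch of how such a helper might fetch binary data with requests.get(). The folder and timeout parameters and the file-naming rule are assumptions:

```python
import os

import requests


def download_image(img_url, folder="images", timeout=10):
    """Download one picture and return the local file path (sketch)."""
    os.makedirs(folder, exist_ok=True)
    # Use the last URL path segment as the file name, ignoring any query string
    filename = os.path.basename(img_url.split("?")[0]) or "image"
    path = os.path.join(folder, filename)
    response = requests.get(img_url, timeout=timeout)
    response.raise_for_status()  # stop on 4xx/5xx instead of saving an error page
    with open(path, "wb") as f:  # binary mode: response.content is bytes
        f.write(response.content)
    return path
```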

Python Scraping in Layer 1

Let us start with getLayer_1(), which saves each web page before BeautifulSoup parses it.

Saving the content as a backup helps with debugging: if an exception happens during scraping, it is hard to find the exact content again among a huge number of web pages. Also, scraping too frequently may get you blocked by some websites.

In BeautifulSoup, the first call, such as soup = BeautifulSoup(page, 'html.parser'), has to choose a parser. The parser can be html.parser, lxml, or html5lib; the section "Differences between parsers" in the Beautiful Soup documentation explains how they differ.

Based on the page structure found in STEP 1, we locate prices inside the product-tuple-description class by passing attrs={} to find(), which is how BeautifulSoup anchors most locations in this example. Pictures are found the same way.
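The save-then-parse idea can be sketched as follows. get_page(), the cache path and the delay value are hypothetical. If a saved copy exists, the helper returns it. Otherwise it pauses to limit request frequency, fetches the page, and writes a backup:

```python
import os
import time

import requests


def get_page(url, cache_path, delay=2.0, timeout=10):
    """Return the HTML for url, reading a saved copy if one exists (sketch)."""
    if os.path.exists(cache_path):
        with open(cache_path, encoding="utf-8") as f:
            return f.read()  # reuse the backup; no request is sent
    time.sleep(delay)        # throttle live requests
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    with open(cache_path, "w", encoding="utf-8") as f:
        f.write(response.text)  # keep a backup for debugging
    return response.text
```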

Layer 1 yields titles, images, and prices. Note that BeautifulSoup uses find_all() to gather every matching element and find() to get just one, much like fetching all rows versus a single row from a database.
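Since getLayer_1() is not reproduced here, the following is only a sketch of the Layer 1 parsing described in STEP 1. It runs against a simplified, hypothetical product snippet, and the real Snapdeal markup may differ. parse_layer_1() is an illustrative name:

```python
from bs4 import BeautifulSoup

# Simplified, hypothetical stand-in for one product in laptop.html
html = """
<div class="product-tuple-image">
  <a pogId="638317853217" href="https://www.snapdeal.com/product/x/638317853217">
    <picture><source srcset="https://example.com/x.webp">
    <img title="Example 14-inch Laptop" src="https://example.com/x.jpg"></picture>
  </a>
</div>
<div class="product-tuple-description">
  <span class="lfloat product-desc-price strike">Rs. 50,000</span>
  <span class="lfloat product-price">Rs. 40,000</span>
  <div class="product-discount"><span>20% Off</span></div>
</div>
"""


def parse_layer_1(page):
    soup = BeautifulSoup(page, "html.parser")
    items = []
    for image in soup.find_all("div", attrs={"class": "product-tuple-image"}):
        # The matching description block is the next one in document order
        desc = image.find_next("div", attrs={"class": "product-tuple-description"})
        link = image.find("a")
        discount = desc.find("div", attrs={"class": "product-discount"})
        items.append({
            "pogid": link["pogid"],  # html.parser lowercases attribute names
            "href": link["href"],
            "img_url": image.find("source")["srcset"],
            "title": image.find("img")["title"],
            "price": desc.find("span", attrs={"class": "product-desc-price"}).get_text(strip=True),
            "price_discount": desc.find("span", attrs={"class": "product-price"}).get_text(strip=True),
            "discount": discount.get_text(strip=True) if discount else None,
        })
    return items


print(parse_layer_1(html)[0]["title"])  # Example 14-inch Laptop
```

Because html.parser lowercases attribute names, pogId in the page is read as link["pogid"].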


For each product, getLayer_2() is called on its Layer 2 page to fill the 'highlight' field. Finally, json.dump() saves the JSON-formatted data to a file. Next, let us go through the BeautifulSoup scraping steps in Layer 2.
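Here is a sketch of that final step. It assumes a getLayer_2()-style callable that takes a product URL and returns a list of highlights. build_results() and its parameters are hypothetical:

```python
import json


def build_results(items, get_highlights, path="result.json"):
    """Attach Layer 2 data to each Layer 1 item and save everything as JSON.

    get_highlights is any callable that takes a product URL and returns a list.
    """
    for item in items:
        item["highlight"] = get_highlights(item["href"])
    with open(path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-ASCII text readable in the file
        json.dump(items, f, ensure_ascii=False, indent=2)
    return items
```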

Python Scraping in Layer 2

As in Layer 1, getLayer_2() finds more product details by locating the highlightsTileContent class. It then loops over the matches and stores the data in an array, whose JSON form is the 'highlight' field discussed in STEP 1.
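Here is a sketch of the Layer 2 parsing. It uses a simplified, hypothetical stand-in for a page such as 638317853217.html, and the inner span class is invented. parse_layer_2() is an illustrative name:

```python
from bs4 import BeautifulSoup

# Simplified, hypothetical stand-in for a Layer 2 page
html = """
<div class="highlightsTileContent ">
  <ul>
    <li><span class="h-content">Intel Core i5 Processor</span></li>
    <li><span class="h-content">8 GB RAM</span></li>
  </ul>
</div>
"""


def parse_layer_2(page):
    soup = BeautifulSoup(page, "html.parser")
    highlights = []
    # class_ matches individual class names, so the trailing space in the
    # page's class attribute does not matter here
    for block in soup.find_all("div", class_="highlightsTileContent"):
        for li in block.find_all("li"):
            highlights.append(li.get_text(strip=True))
    return highlights


print(parse_layer_2(html))  # ['Intel Core i5 Processor', '8 GB RAM']
```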

STEP 3

Handling Exception

BeautifulSoup scraping will not always run smoothly, because some HTML elements may be missing. You have to handle these cases so that the whole procedure keeps going instead of stopping.

Why Will Exceptions Probably Occur?

If every product in laptop.html had identical fields, no exception would occur. However, when a product has fewer fields than the others, looking up a missing field raises an exception.

You should handle every kind of exception so that the scraping process is not interrupted. Imagine the program suddenly exiting on an error after crawling several thousand items; the loss would be significant.

What Exceptions to Handle

Only a few conditions are covered here, and you may encounter more as you scrape more web pages.

A KeyError means the tag was found but lacks the attribute you asked for. If you know an alternative element, fall back to it: in this example, you can replace find('img')['src'] with find('source')['srcset']. If there is no alternative, just skip the field.
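The fallback described above might look like this sketch. get_image_url() and the sample snippets are hypothetical. The sketch also catches TypeError, which is raised when find() returns None because the tag itself is missing:

```python
from bs4 import BeautifulSoup


def get_image_url(product):
    """Return an image URL for one product tag, or None if none is available."""
    try:
        return product.find("img")["src"]
    except (KeyError, TypeError):
        # KeyError: <img> exists but has no src; TypeError: no <img> at all (find() gave None)
        pass
    try:
        return product.find("source")["srcset"]
    except (KeyError, TypeError):
        return None  # no alternative left: skip this field and keep scraping


samples = [
    '<div><img src="a.jpg"></div>',
    '<div><picture><source srcset="b.webp"><img title="no src"></picture></div>',
    '<div><span>no picture</span></div>',
]
for markup in samples:
    print(get_image_url(BeautifulSoup(markup, "html.parser").div))
# prints a.jpg, then b.webp, then None
```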

Conclusion

This article does not repeat the detailed techniques in the BeautifulSoup Documentation. Instead, it offers views and directions for scraping in Python. If you are not yet familiar with these techniques, practise with the documentation and other online resources.

Thank you for reading, and we have suggested more helpful articles here. If you want to share anything, please feel free to comment below. Good luck and happy coding!

Suggested Reading

- 4 Practices for Python File Upload to PHP Server

- Compare Python Dict Index and Other 5 Ops with List